Only two weeks until Casual Connect Berlin! We’re super excited to participate in the Indie Prize and hope to get a lot of feedback from the show. We’ll be at booth 1014, so if you’re attending, come by to check out the latest build and say hi.

Radu and I will both be there, shuffling between our booth and various meetings. We’re not just there to take part in the competition; we’re also looking to connect with publishers, investors and press.

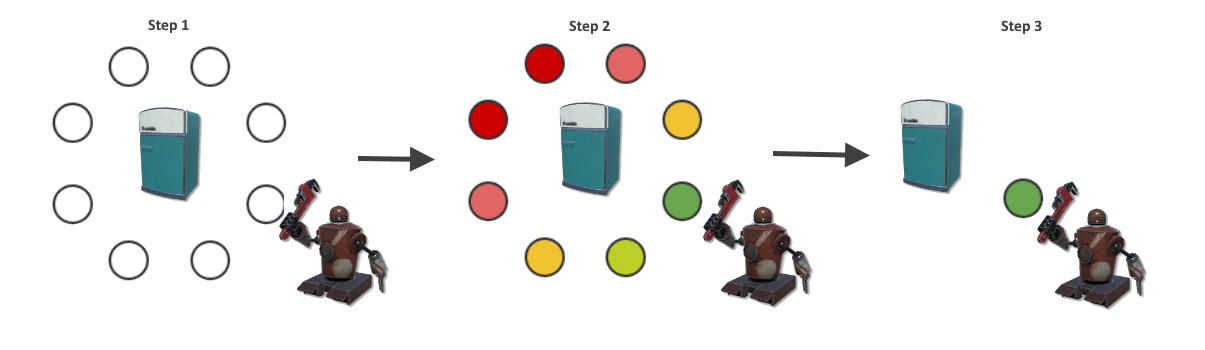

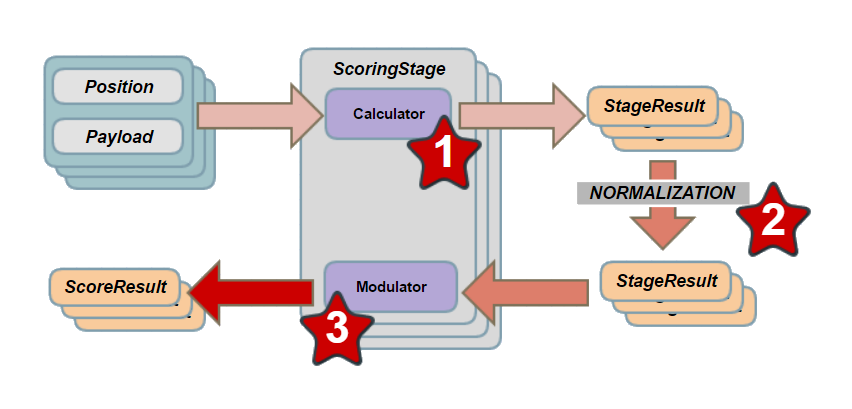

Most importantly, though, we’re looking for feedback. We’ve put a lot of work into expanding the game since Indiecade. We’ve put two brand-new systems in place that should add tactical depth to our combat and give players more things to do (you know… other than smashing bad robots to bits). I can’t wait to see how people react to them and whether they’re as fun as we think they are.

Hallo Berlin! Wir sind froh, dich kennenzulernen! (Hello Berlin! We’re happy to meet you!)